Posts: 14047

Days Won: 29

Everything posted by Pocster

-

Already ahead of you. Got a USB-powered fan to install.

-

Don’t know ; “ Andrews a nonce “ ???

-

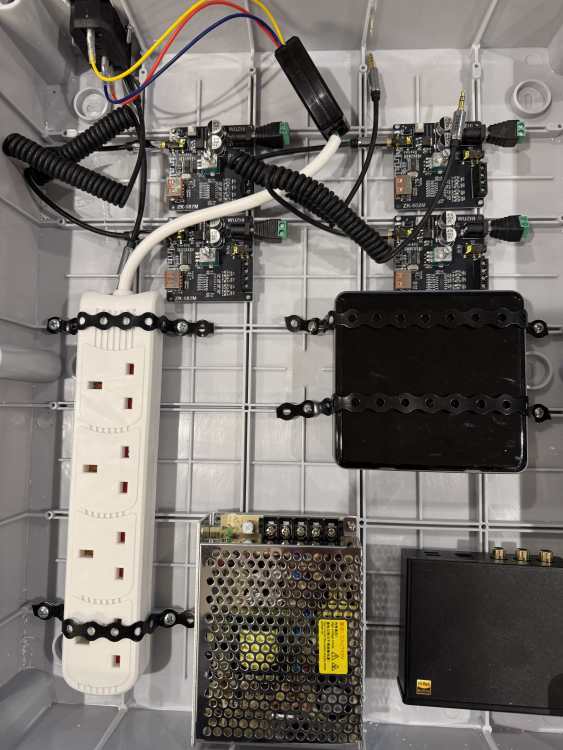

ESP32 S3 m5Stack Cores3 swmbo friendly watering system!

Pocster replied to Pocster's topic in Boffin's Corner

(expletive deleted)ing depth sensor! It’s shite! Or at least the beam is so wide it’s useless in a water barrel. -

ESP32 S3 m5Stack Cores3 swmbo friendly watering system!

Pocster replied to Pocster's topic in Boffin's Corner

Ain’t got any. Using ABS. -

ESP32 S3 m5Stack Cores3 swmbo friendly watering system!

Pocster replied to Pocster's topic in Boffin's Corner

My CAD skills are limited. -

ESP32 S3 m5Stack Cores3 swmbo friendly watering system!

Pocster replied to Pocster's topic in Boffin's Corner

No. The box is bought. The internal white support thing that houses the CoreS3, speaker, amp etc. needs redesigning. -

ESP32 S3 m5Stack Cores3 swmbo friendly watering system!

Pocster replied to Pocster's topic in Boffin's Corner

-

M5 soonish. But marginal gain? Price hikes because of inflated RAM costs? 2nd hand or “opened but never used” M3 Ultra on eBay.

What do we prioritise? RAM, GPU, CPU.

64GB is good, but more is better for larger models.

Newer architecture (M4 latest) gives a performance boost, at a cost.

GPU: more cores.

Oh! Apple have finance options…. That’s trouble. I won’t look….

0% finance up to 12 months, or 4.9% for longer. That’s pretty low interest.

I won’t look.

I’m not looking.

Could order. Then if M5 is “better” relative to cost, could cancel.

I won’t look.

Looked, ordered!

-

My other thread “voice control” is bollocks. Anyone looked at how these work? (expletive deleted)ing amazing! I mean what an LLM does engineering-wise! Been reading non-stop all weekend! The possibilities are mind-boggling. Local LLM is going to be insane…. I’m so excited! (expletive deleted) me! Couple this with multiple microphone arrays. Jesus! Stay tuned for insanity and “Wow (expletive deleted) me” moments. Nerds only need respond to this thread; others can (expletive deleted) about with DPC and which Happy Meal to order.

-

Yeeessss we bloody done it.

Pocster replied to Russell griffiths's topic in General Self Build & DIY Discussion

Now you can (expletive deleted) about for another 10 yrs on other projects, like me! -

Not all plasterers are equal

Pocster replied to Pocster's topic in General Self Build & DIY Discussion

Seriously, I thought he was skimming it with that deliberate unevenness, because most of the house is that style anyway. -

Swmbo’s friend (I don’t have any myself) asked me to look at some skimming they were having done. It’s an 1800 cottage or something; you know, exposed brick, exposed timbers. I went in the lounge where the plasterer was. “Alright mate, that looks good. I like how you’ve made it look uneven to fit the character of the house,” I said. His face dropped and he responded, “That’s flat, mate.” I said no more. Perhaps it was the light. But I think my cock’s flatter than that (expletive deleted)ing wall!

-

Yeeessss we bloody done it.

Pocster replied to Russell griffiths's topic in General Self Build & DIY Discussion

(expletive deleted)! That’s smart, mate!! -

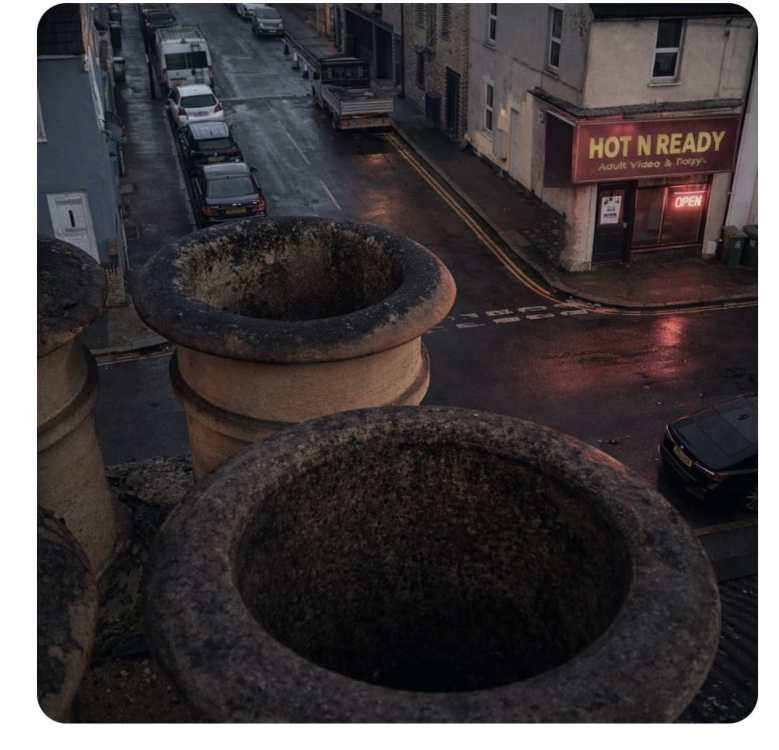

Then you shouldn’t be (expletive deleted)ing about on a chimney stack. Jump over a few streets and get it in there, my man! 💪

-

(expletive deleted) going up a ladder and standing on the roof

-

SWMBO away. If another ‘box’ appeared in the IT cupboard she would not notice 😉

-

I read recently that OpenAI said 99% use ChatGPT as a toy. Without spending a fortune on, say, Claude tokens, ChatGPT gives the best of both worlds for me: creativity and technical. It stuns me that after the internet, and now LLMs, people just don’t leverage the capabilities they have access to. Still, as you say, it depends what layer of the onion you are at…

-

A thermostat is automation. Car lights coming on when it’s dark is automation. It’s everywhere; you just don’t think of it. Time to take it up from HA doing a lot (and working surprisingly well). Local LLM is the gold standard 💪

-

😂😂😂😂 Limited vision expected from people who clearly don’t understand the impact of local LLM and incorporating it into everything you can think of.

-

Gonna need more RAM, gonna need more GPU…